@mark-robustelli Thank you for the quick response. I appreciate you taking the time to consider the issue.

You raise a valid point about the possibility of pod issues. To clarify, here is some additional context and a few observations:

Relevant Pods: The specific pods attempting to communicate with FusionAuth stay up and healthy throughout the periods when FusionAuth becomes unreachable.

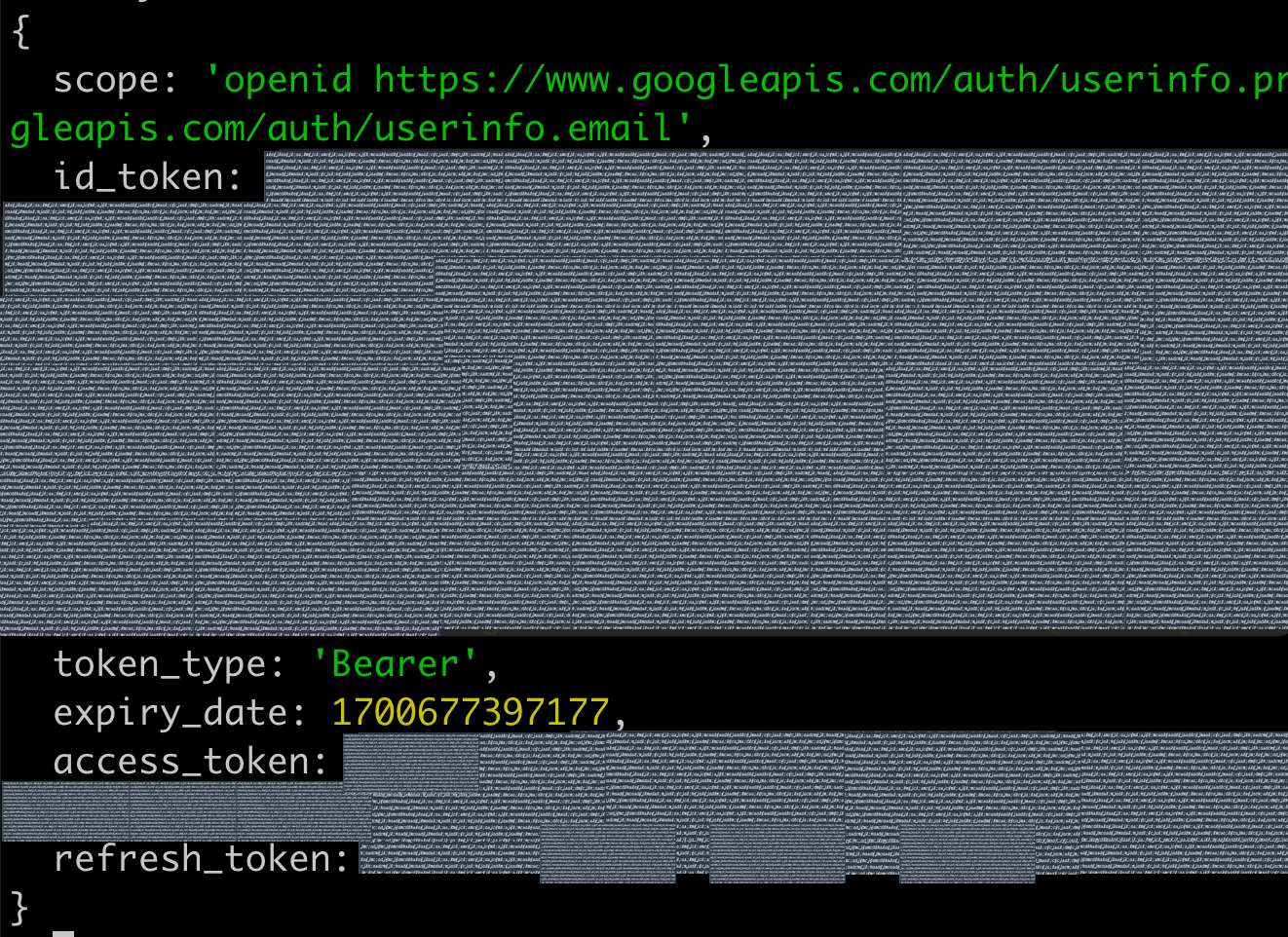

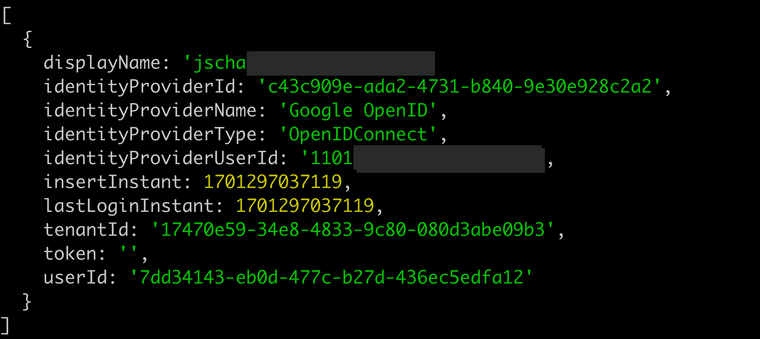

External Connectivity: Successful communication with external services like Google and Gravatar demonstrates that broader network connectivity from our pods is unaffected.

Dedicated Service: FusionAuth is a separate, dedicated service. The problem is that our GKE cluster sporadically fails to reach it.

Given this additional information, I'm now leaning towards these potential areas for investigation:

GKE Egress Rules: I have carefully examined the firewall rules and configurations within GKE that might selectively block traffic to FusionAuth. A misconfiguration could in principle cause this, but it seems unlikely because the failures are intermittent. They also don't correlate with deployments or with anything related to GKE availability.

FusionAuth Ingress Rules: I have double-checked the FusionAuth server's settings to make sure no firewall or IP-based restriction is accidentally blocking connections from our GKE cluster. On one occasion I noticed it go down while it was happening and added an allowed IP at that point. That didn't fix it immediately, but connectivity came back within the next few minutes.

Next Steps:

Would you have any additional guidance or specific areas I should focus on? Any insights on potential pitfalls in GKE's network setup or FusionAuth's configuration that might cause this behavior would be greatly appreciated.

The intermittent nature of the problem is what makes it so difficult to diagnose.
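One way to capture the intermittent failures is sketched below. It assumes Python 3 can be run inside (or alongside) one of the affected pods. The script polls FusionAuth on a fixed interval and logs a UTC timestamp plus the error detail for each check, so outages can be lined up against GKE and FusionAuth logs. The error text distinguishes DNS failures, refused connections, and timeouts, which point to different layers. The function names `check_once` and `monitor` are hypothetical, and the URL you pass in is up to you.

```python
from __future__ import annotations

import time
import urllib.error
import urllib.request
from datetime import datetime, timezone


def check_once(url: str, timeout: float = 5.0) -> tuple[bool, str]:
    """Make one GET request and report whether the server was reachable."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            elapsed = time.monotonic() - start
            return True, f"HTTP {resp.status} in {elapsed:.2f}s"
    except urllib.error.HTTPError as exc:
        # The server answered with an error status (e.g. 401/503),
        # so the network path itself worked.
        return True, f"HTTP {exc.code}"
    except OSError as exc:
        # URLError (DNS failure, connection refused) and socket timeouts are
        # OSError subclasses; the message shows which one occurred.
        reason = getattr(exc, "reason", exc)
        return False, f"{type(exc).__name__}: {reason}"


def monitor(url: str, interval: float = 15.0, iterations: int | None = None) -> None:
    """Poll `url` every `interval` seconds, printing one timestamped line per check.

    Runs forever when `iterations` is None.
    """
    done = 0
    while iterations is None or done < iterations:
        ok, detail = check_once(url)
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        print(f"{stamp} {'OK  ' if ok else 'FAIL'} {url} {detail}", flush=True)
        done += 1
        if iterations is None or done < iterations:
            time.sleep(interval)
```

For example, call `monitor("https://<your-fusionauth-host>/api/status")` from a pod (for instance via `kubectl exec`). The host is a placeholder, and `/api/status` is my assumption for a lightweight FusionAuth health endpoint; any URL on the FusionAuth server would work for a reachability check.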

Thanks again!